What is Rate Limiting?

In distributed systems and network management, controlling the rate of traffic flow is crucial for maintaining system stability and availability. Controlling the flow and rate of the traffic is known as Rate Limiting. Not having rate limiting may lead to exploitation of systems and high downtime.

I remember in one of my recent projects where I built a video transcoder application, I wasn't worried about rate limiting, and due to this, the system was exploited by a user that uploaded 1,000 videos and ended up in downtime.

To implement rate limiting we have two popular strategies which are Token Bucket and Leaky Bucket. In this article, we are going to explore both of these strategies in detail which will help us understand which is the right one for us.

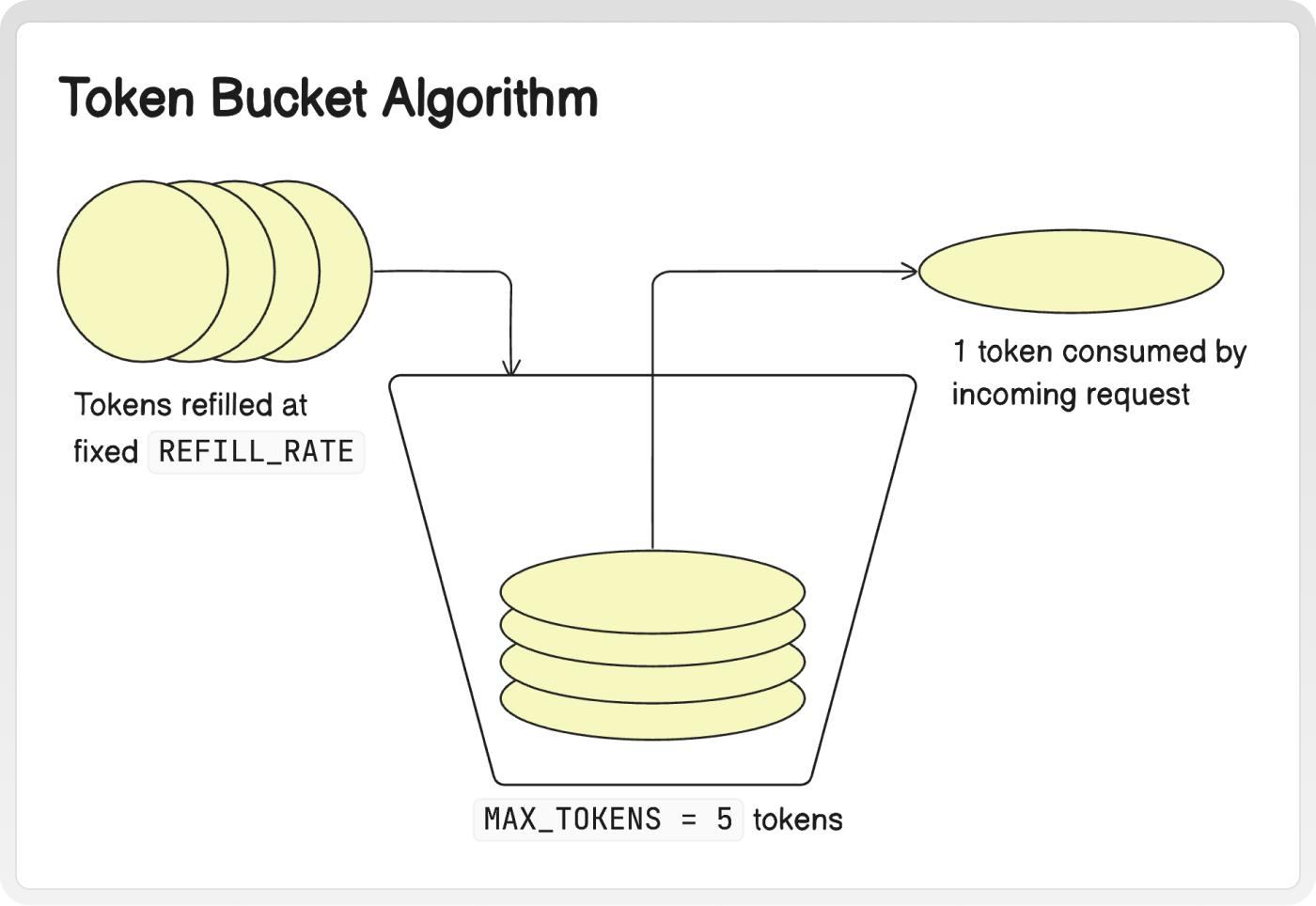

Token Bucket Algorithm

The Token Bucket algorithm uses a bucket of tokens to limit and regulate the flow of requests. To understand this algorithm, imagine there is a bucket containing a fixed number of tokens. Tokens in this bucket are added at a fixed rate and when a request arrives at the server, a token must be consumed from the bucket. If there are no tokens available, the request can be either rejected or delayed until tokens are available in the bucket.

const MAX_TOKENS = 5;

const REFILL_RATE = 5000; // Milliseconds between token refills (5 seconds)

const bucket = {

tokens: MAX_TOKENS,

hasTokens: function () { // Checks if we have tokens available

return this.tokens > 0;

},

consumeToken: function () { // Function to consume a token

if (this.hasTokens())

this.tokens -= 1;

},

releaseToken: function () { // Function to release a token

if (this.tokens < MAX_TOKENS)

this.tokens += 1;

}

};

async function handleIncomingRequest(requestId) {

if (!bucket.hasTokens()) {

console.log('Out of tokens! Please try again later', requestId);

return;

}

bucket.consumeToken();

console.log('✅ Processing Request...', requestId);

await waitFor(2 * 1000); // Simulate a fake wait time

return true;

}

function waitFor(ms) {

return new Promise(resolve => setTimeout(resolve, ms));

}

setInterval(() => {

if (!bucket.hasTokens())

bucket.releaseToken();

}, REFILL_RATE); // Add back tokens once every REFILL_RATE milliseconds!Leaky Bucket Algorithm

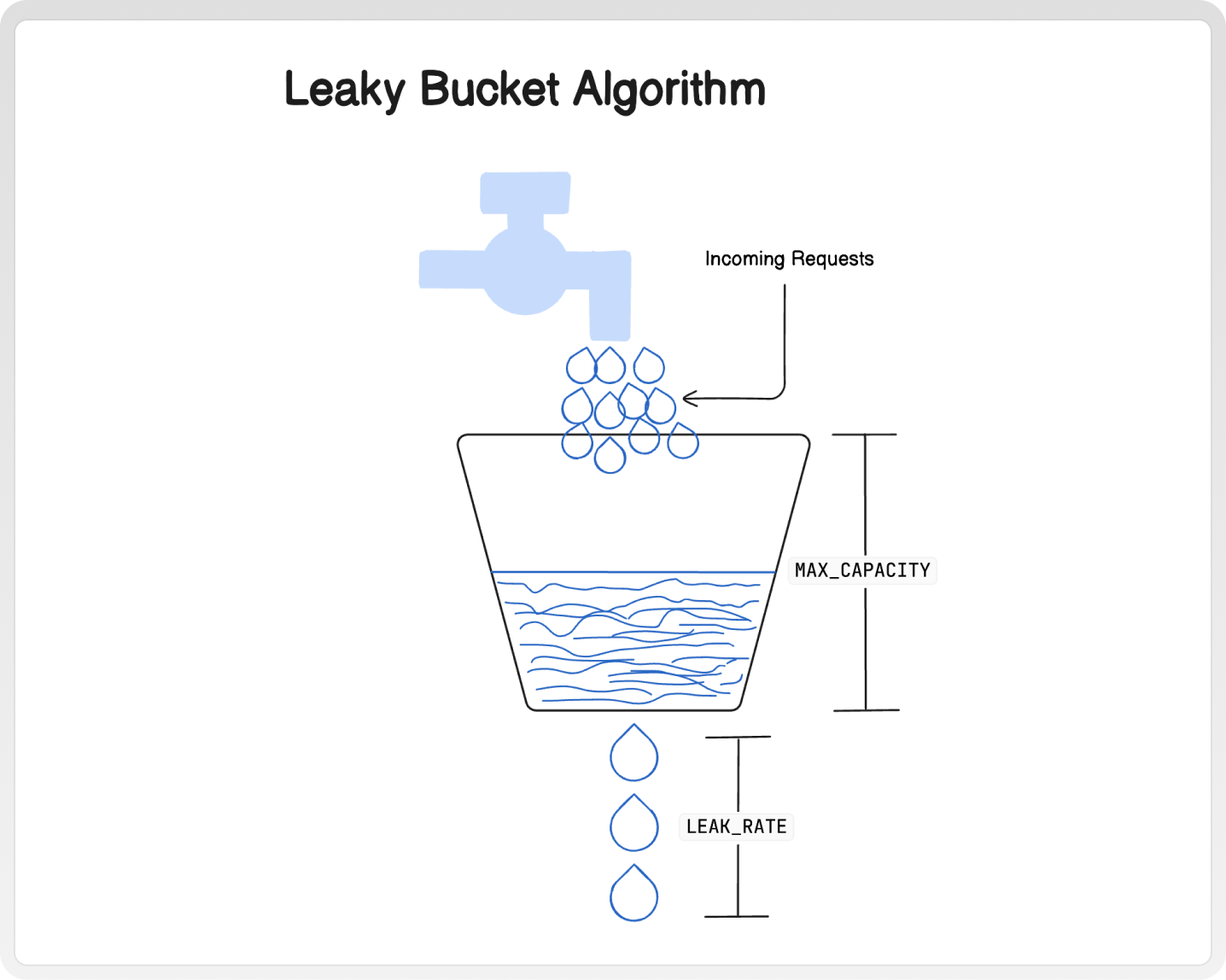

The Leaky Bucket algorithm works on the principle of a physical leaky bucket. Incoming requests are added to the bucket, and the bucket has a fixed capacity. If the bucket overflows, the excess requests are either dropped or delayed. The requests in the bucket are then processed at a fixed rate.

To grasp this idea, think of a bucket filled with water. There's a small hole at the bottom. Water keeps pouring in from the top. If the bucket gets too full, it spills over. The hole at the bottom only lets out 1 drop of water every second, which represents processing speed.

const BUCKET_CAPACITY = 10; // Max requests the bucket can hold

const LEAK_RATE = 1000; // Milliseconds between requests processing

let leakyBucket = {

requests: [],

addRequest: function(requestId) {

if (this.requests.length < BUCKET_CAPACITY) {

this.requests.push(requestId);

console.log(`📥 Request ${requestId} added.`);

return true;

} else {

console.log(`❌ Bucket full. Request ${requestId} dropped.`);

return false;

}

},

processRequest: function() {

if (this.requests.length > 0) {

const requestId = this.requests.shift();

console.log(`✅ Processing Request ${requestId}`);

return true;

}

return false;

}

};

function handleIncomingRequest(requestId) {

if (!leakyBucket.addRequest(requestId)) {

console.log('Request could not be processed. Try again later.');

}

}

setInterval(() => {

leakyBucket.processRequest();

}, LEAK_RATE); // Processes one request per LEAK_RATEThe Pros and Cons

Now that we know the basics of both the Token Bucket and Leaky Bucket Algorithm, let's discuss the pros and cons of the two.

Token Bucket

- Pros

- Provides more control over the request processing rate with

MAX_CAPACITYandREFILL_RATEas parameters of the token bucket. - Allows a short burst of traffic exceeding the

REFILL_RATEto be processed as long as tokens are available in the bucket.

- Provides more control over the request processing rate with

- Cons

- Requires additional overhead for token management.

- Can be exploited by greedy clients.

Leaky Bucket

- Pros

- Constant output which is simply determined by

LEAK_RATE. - Predictable behavior allows for easier maintenance.

- Constant output which is simply determined by

- Cons

- Limited flexibility in adjusting to varying traffic patterns (e.g. cannot quickly process small bursts exceeding the

LEAK_RATE).

- Limited flexibility in adjusting to varying traffic patterns (e.g. cannot quickly process small bursts exceeding the

Making a Decision

Choosing the right rate-limiting algorithm can be tricky. Here are some simple guidelines to help you decide which algorithm fits best for your needs.

Use Token Bucket When:

- You want more granular control over how fast requests are processed.

- Your traffic patterns change frequently and you need to be adaptive.

- You want more control over distributing limited resources among users beyond first-come-first-served.

Use Leaky Bucket When:

- You want a straightforward way to manage consistent traffic flow.

- Keeping your implementation simple and light is a priority.

- You want to ensure sudden spikes in traffic don't overwhelm your system.

Real-World Examples

Customer Helpline (Token Bucket Algorithm)

Let's say you're calling a customer helpline, and the company uses a token bucket system to manage incoming calls. Each person who calls consumes a token, and the bucket represents the capacity the helpline can handle at once. When you call, if there are tokens available (operators free to take your call), you'll get connected right away. But if all the tokens are currently being used (all operators are busy), you'll have to wait until someone finishes their call and a token becomes available again.

Tokens: Each operator in the customer helpline call center represents a token.

Bucket: The bucket represents the capacity of the customer helpline to handle concurrent calls, or the maximum number of callers the helpline can assist simultaneously.

Token Availability: When a customer initiates a call to the helpline, it needs to consume a token. If there are available tokens in the bucket (operators are free), the incoming call is promptly connected.

Token Exhaustion: However, if the bucket reaches its maximum capacity because all operators are busy assisting other customers, there are no available tokens left. In this scenario, the incoming call will have to wait until one of the ongoing conversations concludes, freeing up a token.

YouTube Video Processing System (Leaky Bucket Algorithm)

Imagine YouTube receives a constant stream of video uploads from users worldwide. To ensure that the video processing system doesn't get overwhelmed and can handle the incoming videos efficiently, YouTube implements a leaky bucket algorithm within its message queue system.

Here's how it works:

Bucket: In this scenario, the bucket represents the message queue where incoming video upload requests are stored.

Water (Messages): The water represents the video upload requests waiting in the message queue to be processed.

Hole (Processing Rate): The hole at the bottom of the bucket represents the maximum processing rate at which YouTube's servers can handle video uploads.

TL;DR

Rate limiting is crucial for system stability and availability. The two main algorithms, Token Bucket and Leaky Bucket, control traffic flow. Token Bucket processes the requests with a variable amount of available tokens at any given time, while Leaky Bucket processes requests at a constant rate like a leaking bucket.

Token Bucket

- Pros: Granular control, can process temporary bursts.

- Cons: Token management overhead, susceptible to exploitation.

Leaky Bucket

- Pros: Predictable, constant output, protects against large bursts.

- Cons: Less flexible, no adaptation to dynamic traffic.

.png)

.svg)

.svg)

.svg)